
11 Things You Need To Know About Data-Driven Testing

Published 1 year ago by techonpc


Traditional record-and-playback test automation has been criticized for being inflexible and difficult to extend. Data-driven testing was developed in response to this criticism.

Put simply, data-driven testing means parameterizing a test and then running it with different data. Running the same test case with a wide range of inputs increases the coverage a single test provides, and it lets you combine positive and negative test cases in the same test.
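A minimal sketch in Python of what parameterizing a test looks like. `apply_discount` is a hypothetical function under test; the point is that the check is written once and the data table supplies each input together with its expected result.

```python
# Hypothetical function under test: price after a percentage discount.
def apply_discount(price: float, percent: float) -> float:
    return round(price * (1 - percent / 100), 2)


# Test data kept apart from the test logic: (price, percent, expected).
TEST_DATA = [
    (100.0, 0, 100.0),
    (100.0, 10, 90.0),
    (80.0, 25, 60.0),
]


def run_data_driven(test_data):
    """Run the same check once per data row and collect any mismatches."""
    failures = []
    for price, percent, expected in test_data:
        actual = apply_discount(price, percent)
        if actual != expected:
            failures.append((price, percent, expected, actual))
    return failures


if __name__ == "__main__":
    print(run_data_driven(TEST_DATA) or "all rows passed")
```

Adding a row to `TEST_DATA` adds a test case without touching `run_data_driven`.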

Suppose our employer has given us a spreadsheet full of data: a variety of users, the items they plan to purchase, the quantities of those purchases, and other information. All of this data must be entered into our system. Creating a separate test case for every line in the spreadsheet would be extremely tedious.

With data-driven testing, however, we can read the data from the spreadsheet and feed it into our application. That way we only need to write the test case once, and when necessary we can add more test cases simply by adding rows to the data source, such as this Excel file.
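A sketch of the spreadsheet scenario, assuming the sheet has been exported to CSV with hypothetical columns `user`, `item`, and `quantity`, and that `place_order` stands in for the real application. Reading an `.xlsx` file directly would need a third-party library such as openpyxl, so the standard `csv` module is used here.

```python
import csv
import io

# Stand-in for the exported spreadsheet; in practice this could be
# open("orders.csv", newline="") with a hypothetical file name.
ORDERS_CSV = """user,item,quantity
alice,keyboard,2
bob,monitor,1
carol,mouse,5
"""


# Hypothetical application entry point that returns an order confirmation.
def place_order(user: str, item: str, quantity: int) -> dict:
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return {"user": user, "item": item, "quantity": quantity, "status": "confirmed"}


def run_order_tests(csv_file) -> int:
    """One test case, executed once per spreadsheet row. Returns the rows checked."""
    checked = 0
    for row in csv.DictReader(csv_file):
        result = place_order(row["user"], row["item"], int(row["quantity"]))
        assert result["status"] == "confirmed", row
        assert result["quantity"] == int(row["quantity"]), row
        checked += 1
    return checked


if __name__ == "__main__":
    print(run_order_tests(io.StringIO(ORDERS_CSV)), "rows passed")
```

New orders are tested by appending lines to the CSV; `run_order_tests` stays the same.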

11 Things You Need To Know About Data-Driven Testing

This section lists 11 things we think you should know about data-driven testing.

- Data-driven testing is a software testing technique, or more precisely, a strategy for building automated tests in which the test scripts read their data from data files.

Typical data sources include data pools, Excel files, CSV files, ADO objects, ODBC sources, and other file types. The functions in the test scripts read both the test data and the expected output values from this data store.
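A sketch of hiding the data source behind one loader so the test scripts do not care where the rows come from. `load_test_data` is a hypothetical helper that only handles CSV and JSON, since those need nothing beyond the standard library; Excel, ADO, or ODBC sources would need extra libraries.

```python
from __future__ import annotations

import csv
import json
from pathlib import Path


def load_test_data(path: str) -> list[dict]:
    """Return test rows as dicts, whatever the underlying file format."""
    p = Path(path)
    if p.suffix == ".csv":
        with p.open(newline="") as f:
            return list(csv.DictReader(f))
    if p.suffix == ".json":
        with p.open() as f:
            return json.load(f)  # expects a JSON array of objects
    raise ValueError(f"unsupported data source: {p.suffix}")
```

CSV values arrive as strings while JSON keeps numbers as numbers, so a test script reading CSV still has to convert types itself.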

- Imagine you must automate a test for a program with several input fields. In a simple test you would usually hardcode those inputs before running it, but hardcoding falls short at scale. Once you have to cover many combinations of permissible input values for worst-case, best-case, positive, and negative scenarios, hardcoded inputs quickly become complex and difficult to manage. If all of the test input data can be recorded in a single spreadsheet, you can instead design the test to “read” its input values from there. That is exactly what data-driven testing aims to do.

By separating test data (input values) from test logic (scripts), data-driven testing (DDT) makes both easier to create, change, use, and scale. In other words, DDT automates a series of test steps to run repeatedly over various permutations of data, so that you can compare actual and expected outcomes for validation. These test steps are organized in test scripts as reusable test logic.
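A sketch of running the same test steps over every permutation of input values, assuming a hypothetical `validate_signup` function with two fields. Each candidate value is tagged as valid or invalid in the data, so the expected outcome comes from the data rather than from the code under test, and the boundary values cover the best- and worst-case edges.

```python
from itertools import product


# Hypothetical function under test: accepts a signup only if both fields are valid.
def validate_signup(username: str, age: int) -> bool:
    return 3 <= len(username) <= 20 and 18 <= age <= 120


# Test data: each value is paired with whether it is valid on its own.
USERNAMES = [("bob", True), ("ab", False), ("x" * 20, True), ("x" * 21, False)]
AGES = [(18, True), (17, False), (120, True), (121, False)]


def run_permutations():
    """Run the same check for every (username, age) combination."""
    results = []
    for (name, name_ok), (age, age_ok) in product(USERNAMES, AGES):
        expected = name_ok and age_ok          # anticipated outcome, from the data
        actual = validate_signup(name, age)    # actual outcome, from the system
        results.append((name, age, expected, actual, expected == actual))
    return results


if __name__ == "__main__":
    for name, age, expected, actual, passed in run_permutations():
        print(f"{name[:8]:<8} {age:>3} expected={expected} actual={actual} {'PASS' if passed else 'FAIL'}")
```

Four usernames and four ages yield 16 test runs from one test script and two short data lists.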

- Data-driven testing can be roughly divided into the following three categories:

  - Keyword-driven test automation: test steps are written as keywords (action words) plus their arguments in a table, which a driver script interprets. Keyword-driven test automation is also known as table-driven test automation.

  - Data-driven scripts: application-specific scripts, written in a language such as JavaScript, that are designed to accommodate changing data sets.

  - Hybrid test automation: combines data-driven and keyword-driven automation frameworks. A keyword table drives the test steps, and each keyword ID is tied to a data table that supplies the values for those steps.
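A minimal keyword-driven interpreter, turned into a hybrid by letting keyword arguments such as `$item` pull values from a separate data table. The `Store` class, the keyword names, and the prices are all hypothetical; a real framework would map keywords to UI or API actions.

```python
class Store:
    """Hypothetical application under test."""
    PRICES = {"pen": 2, "book": 12}

    def __init__(self):
        self.page = None
        self.cart = {}

    def open(self, page):
        self.page = page

    def add_to_cart(self, item, quantity):
        if self.page != "store":
            raise RuntimeError("must open the store first")
        self.cart[item] = self.cart.get(item, 0) + quantity

    def total(self):
        return sum(self.PRICES[item] * qty for item, qty in self.cart.items())


def verify_total(app, expected):
    assert app.total() == expected, f"expected total {expected}, got {app.total()}"


# Keyword implementations: each keyword name maps to an action on the app.
KEYWORDS = {
    "open": lambda app, page: app.open(page),
    "add_to_cart": lambda app, item, qty: app.add_to_cart(item, qty),
    "verify_total": verify_total,
}

# Keyword table (the test steps). Arguments starting with "$" come from the data row.
STEPS = [
    ("open", "store"),
    ("add_to_cart", "$item", "$quantity"),
    ("verify_total", "$expected_total"),
]

# Data table: the data-driven half of the hybrid approach.
DATA = [
    {"item": "pen", "quantity": 3, "expected_total": 6},
    {"item": "book", "quantity": 1, "expected_total": 12},
]


def resolve(arg, row):
    return row[arg[1:]] if arg.startswith("$") else arg


def run_test(steps, row):
    """Interpret the keyword table once for a single data row; return the cart total."""
    app = Store()
    for keyword, *args in steps:
        KEYWORDS[keyword](app, *[resolve(a, row) for a in args])
    return app.total()


if __name__ == "__main__":
    for row in DATA:
        print(row["item"], "->", run_test(STEPS, row))
```

Testers can add rows to `DATA` or reorder `STEPS` without editing the keyword implementations.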

- Regression testing centers on a suite of test cases that runs on every build, to make sure the latest version of the program does not break features that previously worked. DDT (data-driven testing) speeds up this process: because it runs tests against many sets of data values, regression tests can cover a whole workflow for several data sets.
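A sketch of a data-driven regression test with the standard `unittest` module, assuming a hypothetical `checkout_total` workflow. `subTest` runs the same workflow once per data set and reports each failing data set separately instead of stopping at the first one.

```python
import unittest


# Hypothetical workflow under regression test: price the cart, then apply tax.
def checkout_total(items, tax_rate):
    subtotal = sum(price * qty for price, qty in items)
    return round(subtotal * (1 + tax_rate), 2)


# Several data sets for the same end-to-end workflow.
WORKFLOW_DATA = [
    {"items": [(10.0, 1)], "tax_rate": 0.0, "expected": 10.0},
    {"items": [(10.0, 2), (5.0, 1)], "tax_rate": 0.1, "expected": 27.5},
    {"items": [], "tax_rate": 0.2, "expected": 0.0},
]


class CheckoutRegressionTest(unittest.TestCase):
    def test_checkout_workflow(self):
        for case in WORKFLOW_DATA:
            # Each data set is reported as its own subtest if it fails.
            with self.subTest(case=case):
                self.assertEqual(
                    checkout_total(case["items"], case["tax_rate"]), case["expected"]
                )


if __name__ == "__main__":
    unittest.main()
```

Running this on every build means a new regression scenario only needs a new entry in `WORKFLOW_DATA`.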

- It establishes a clear, logical division between the test scripts and the test data. Put simply, you do not need to modify test cases again and again for different sets of input data. Separating variables (test data) from logic (test scripts) lets you reuse both, and each is independent of the other: a tester can add more test data without changing a test case, and a programmer can change the code in a test script without worrying about the test data.

- Next, to make data-driven testing as effective as possible, test with both positive and negative data. Everyone does positive and negative testing, and both are equally important. A system's effectiveness is determined by its ability to handle exceptions, which may occur in worst-case situations the system runs into, and a good test mechanism should check that these exceptions are handled properly. It is also important to use dynamic assertions, whose expected results are updated along with the pre-test data and conditions rather than hardcoded in the script. Verification becomes even more important during code updates and new releases, so automated scripts should supplement these dynamic assertions by adding previously tested components to the current test runs.
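A sketch of mixing positive and negative data in one table, assuming a hypothetical `parse_quantity` form field that accepts 1 to 99. Each row carries its own expected result, either a value or the exception type the system should raise, so the assertion follows the data instead of being hardcoded in the script.

```python
# Hypothetical function under test: parses a quantity field from a form.
def parse_quantity(text: str) -> int:
    value = int(text)  # raises ValueError for non-numeric input
    if not 1 <= value <= 99:
        raise ValueError(f"quantity out of range: {value}")
    return value


# Positive rows expect a value; negative rows expect an exception type.
CASES = [
    ("1", 1),
    ("99", 99),
    ("0", ValueError),
    ("100", ValueError),
    ("abc", ValueError),
    ("", ValueError),
]


def run_cases(cases):
    """Compare each actual result (value or exception type) with the expected one."""
    failures = []
    for text, expected in cases:
        try:
            actual = parse_quantity(text)
        except Exception as exc:  # record the exception type so it can be compared
            actual = type(exc)
        if actual != expected:
            failures.append((text, expected, actual))
    return failures


if __name__ == "__main__":
    print(run_cases(CASES) or "all positive and negative rows passed")
```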

Teams still often rely on manual actions to start an automated process; keep these to a minimum. A manual trigger is not an effective way to test a navigational flow, so when a workflow has several navigation or redirection paths, it is better to design a test script that handles all of them. In general, it is recommended to build the test-flow navigation directly into the test script.
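A sketch of building the navigation into the script rather than relying on manual triggers: each data row lists the actions for one path through a multi-step flow, and the script walks them. The page names and the `NAVIGATION` map are hypothetical; in a real UI test each action would drive the browser or app.

```python
# Hypothetical navigation graph: (current page, action) -> next page.
NAVIGATION = {
    ("cart", "checkout"): "login",
    ("login", "sign_in"): "shipping",
    ("login", "guest"): "shipping",
    ("shipping", "continue"): "payment",
    ("payment", "pay"): "confirmation",
    ("payment", "back"): "shipping",
}

# Each data row: the actions for one path, and the page it should end on.
FLOWS = [
    (["checkout", "sign_in", "continue", "pay"], "confirmation"),
    (["checkout", "guest", "continue", "back", "continue", "pay"], "confirmation"),
    (["checkout", "pay"], None),  # invalid transition that the flow should reject
]


def follow(actions, start="cart"):
    """Drive the whole navigation from the script; return the final page or None."""
    page = start
    for action in actions:
        page = NAVIGATION.get((page, action))
        if page is None:
            return None
    return page


if __name__ == "__main__":
    for actions, expected in FLOWS:
        print(" -> ".join(actions), "|", follow(actions) == expected)
```

Redirects and back-navigation become ordinary data rows, so new paths need no extra manual steps.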

You should also consider the tests from more than one perspective. This testing procedure is more analytical than logical. If you only want to verify the workflow, you can run simple tests that catch a break or an exception predicted anywhere in the process. However, reusing the same tests to cover other capabilities, such as security and performance, broadens the coverage of the current design. For instance, while testing the performance of the same process with data that exceeds a product's maximum limits, you can observe load latency, the time taken to fetch data from APIs, and other factors.
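A sketch of reusing one data-driven test from a performance perspective, assuming a hypothetical `process_batch` operation with a 1000-item limit. The same kind of rows are timed with `time.perf_counter`, including one that exceeds the limit; a real setup would measure network or API latency instead of an in-process sort.

```python
import time

MAX_ITEMS = 1000  # hypothetical product limit


def process_batch(items):
    """Hypothetical operation under test; rejects batches over the limit."""
    if len(items) > MAX_ITEMS:
        raise ValueError("batch exceeds limit")
    return sorted(items)


# Typical size, exactly at the limit, and just over the limit.
SIZES = [10, MAX_ITEMS, MAX_ITEMS + 1]


def measure(sizes):
    """Run the operation once per data row and record outcome and elapsed time."""
    report = []
    for n in sizes:
        data = list(range(n, 0, -1))
        start = time.perf_counter()
        try:
            process_batch(data)
            outcome = "ok"
        except ValueError:
            outcome = "rejected"
        elapsed = time.perf_counter() - start
        report.append((n, outcome, elapsed))
    return report


if __name__ == "__main__":
    for n, outcome, elapsed in measure(SIZES):
        print(f"{n:>5} items: {outcome:<8} {elapsed * 1000:.3f} ms")
```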

- Data-driven testing is essential because testers often use many pieces of data for a single test, and creating a separate test for each item of data takes a lot of effort. Data-driven testing keeps the data out of the test scripts and runs the same scripts against different permutations of input data, which makes producing test results more efficient.

- Data-driven testing can be applied in every phase of development. Its concerns are usually handled in a single process, but the approach itself can be used in a wide variety of test scenarios.

It lets programmers and testers keep the logic of the test cases or scripts separate from the test data. The same test cases can run many times, which reduces the number of test cases and scripts needed, and changes to a test script do not affect the test data.

- Data-driven testing also has drawbacks. The quality of the tests depends on the automation skills of the team applying it. Validating data takes a lot of time when testing massive volumes of it. Because data-driven testing requires a large amount of code, maintenance is a major hurdle. It demands a high level of technical proficiency, and a tester may need to learn a new programming language. There is also more documentation to write, most often covering script management, test infrastructure, and test results. Finally, the data files have to be created and maintained, for example in a text editor such as Notepad.

- The framework for data-driven automated testing can be created at the same time as, or even before, the application itself. Because it works with parameterized variables, independent test data sheets or files, and separate test scripts, building the framework is independent of the application under test (AUT). Using an automation tool such as Testsigma for DDT makes this process simpler. Even so, writing the scripts that read and write test data from files, along with the test function scripts, takes expertise and effort.
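A sketch of why the framework can exist before the AUT: the runner depends only on an interface and on data rows, so a stub can stand in until the real application is ready. `TaxService`, `StubTaxService`, and the rates are hypothetical.

```python
from typing import Iterable, Protocol, Tuple


class TaxService(Protocol):
    """The only contract the test framework relies on (hypothetical interface)."""

    def tax(self, amount: float, region: str) -> float: ...


def run_suite(service: TaxService, rows: Iterable[Tuple[float, str, float]]):
    """Generic data-driven runner; it never imports the real application."""
    failures = []
    for amount, region, expected in rows:
        actual = service.tax(amount, region)
        if actual != expected:
            failures.append((amount, region, expected, actual))
    return failures


class StubTaxService:
    """Stand-in used while the real application is still being built."""

    def __init__(self, rates):
        self.rates = rates

    def tax(self, amount, region):
        return round(amount * self.rates[region], 2)


RATES = {"north": 0.10, "south": 0.05, "free": 0.0}
ROWS = [(100.0, "north", 10.0), (80.0, "south", 4.0), (50.0, "free", 0.0)]

if __name__ == "__main__":
    print(run_suite(StubTaxService(RATES), ROWS) or "all rows passed against the stub")
```

Once the real service exists, it is passed to the same `run_suite` with the same `ROWS`, leaving both the data and the runner unchanged.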

- The core goal of data-driven testing is to observe user behavior in real scenarios. For the best user experience, test your web and mobile apps on real hardware, operating systems, and browsers. Building an in-house testing infrastructure is costly and brings scalability and operational problems, so it is better and more economical to use a real device cloud for data-driven testing than an in-house device lab. Cloud testing platforms like LambdaTest can meet your testing requirements here.

LambdaTest gives you online access to more than 3000 desktop and mobile web browsers for testing your websites and web apps. Because the cloud environment is easy to access, software testing becomes a manageable and scalable process. Cross-browser testing tools like LambdaTest provide access to an online device farm for mobile and web testing; for mobile apps, you can test on cloud-based Android Emulators, iOS Simulators, and a real device cloud.

Summing up!

Let’s look at an example to quickly grasp the concept of data-driven testing. Consider a login form that requires both a username and a password. If both the username and the password are correct, the login succeeds and the home page is shown.

Another situation to consider is when the username is correct but the password is not (or vice versa): the login fails, an invalid-credentials message is displayed, and access to the home page is denied. Likewise, if both the username and the password are wrong, the login does not work.

The logic is therefore a control-flow procedure for verifying each function and condition. Once the test scripts are finalized, automation code is written for each of these conditions, and the scripts run and act as expected.
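The login scenarios above map directly onto a data table. This is a sketch assuming a hypothetical `login` function and account store in place of the real application; one test function checks every combination of correct and incorrect credentials.

```python
# Hypothetical account store and login function standing in for the real application.
ACCOUNTS = {"alice": "correct-horse"}


def login(username: str, password: str) -> str:
    """Return the page shown after a login attempt."""
    if ACCOUNTS.get(username) == password:
        return "home"
    return "error: invalid credentials"


# Both correct, one incorrect (either field), and both incorrect.
LOGIN_DATA = [
    ("alice", "correct-horse", "home"),
    ("alice", "wrong-pass", "error: invalid credentials"),
    ("mallory", "correct-horse", "error: invalid credentials"),
    ("mallory", "wrong-pass", "error: invalid credentials"),
]


def test_login():
    for username, password, expected in LOGIN_DATA:
        actual = login(username, password)
        assert actual == expected, f"{username!r}/{password!r}: expected {expected!r}, got {actual!r}"


if __name__ == "__main__":
    test_login()
    print("all login scenarios passed")
```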

A very long time ago, in the late 1990s to be precise, a team of programmers sat down to start writing code for their groundbreaking application idea. A few weeks later, while reviewing each other's code, they discovered that they had written some of the same code; for example, each of them had written code for a radio button. They realized that code can be reused and that writing code ten times for the same radio button is unnecessary and an enormous waste of time and resources. As a result, reusable code libraries, or frameworks, were produced. Using frameworks in DDT lets us reuse existing code rather than building it from scratch. Note that you don't have to be a skilled programmer to use such a framework, whether to retrieve a script or to quickly find and fix mistakes in one.

Follow Me

Unleashing the Power of the Office Accelerator: Maximizing Productivity and Efficiency in the Workplace with Office 365 Accelerator

Unlocking the Hidden Potential of Your Website: Strategies for Growth

From AI to VR: How Cutting-Edge Tech Is Reshaping Personal Injury Law in Chicago

Trending

Microsoft4 years ago

Microsoft4 years agoMicrosoft Office 2016 Torrent With Product Keys (Free Download)

Torrent4 years ago

Torrent4 years agoLes 15 Meilleurs Sites De Téléchargement Direct De Films 2020

Money3 years ago

Money3 years ago25 Ways To Make Money Online

Torrent4 years ago

Torrent4 years agoFL Studio 12 Crack Télécharger la version complète fissurée 2020

Education3 years ago

Education3 years agoSignificado Dos Emojis Usado no WhatsApp

Technology4 years ago

Technology4 years agoAvantages d’acheter FL Studio 12

Technology4 years ago

Technology4 years agoDESKRIPSI DAN MANFAAT KURSUS PELATIHAN COREL DRAW

Education3 years ago

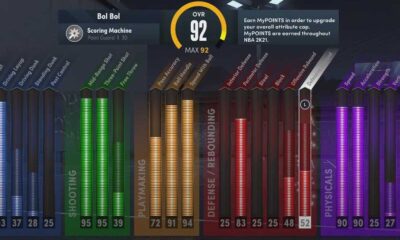

Education3 years agoBest Steph Curry NBA 2K21 Build – How To Make Attribute, Badges and Animation On Steph Curry Build 2K21

You must be logged in to post a comment Login